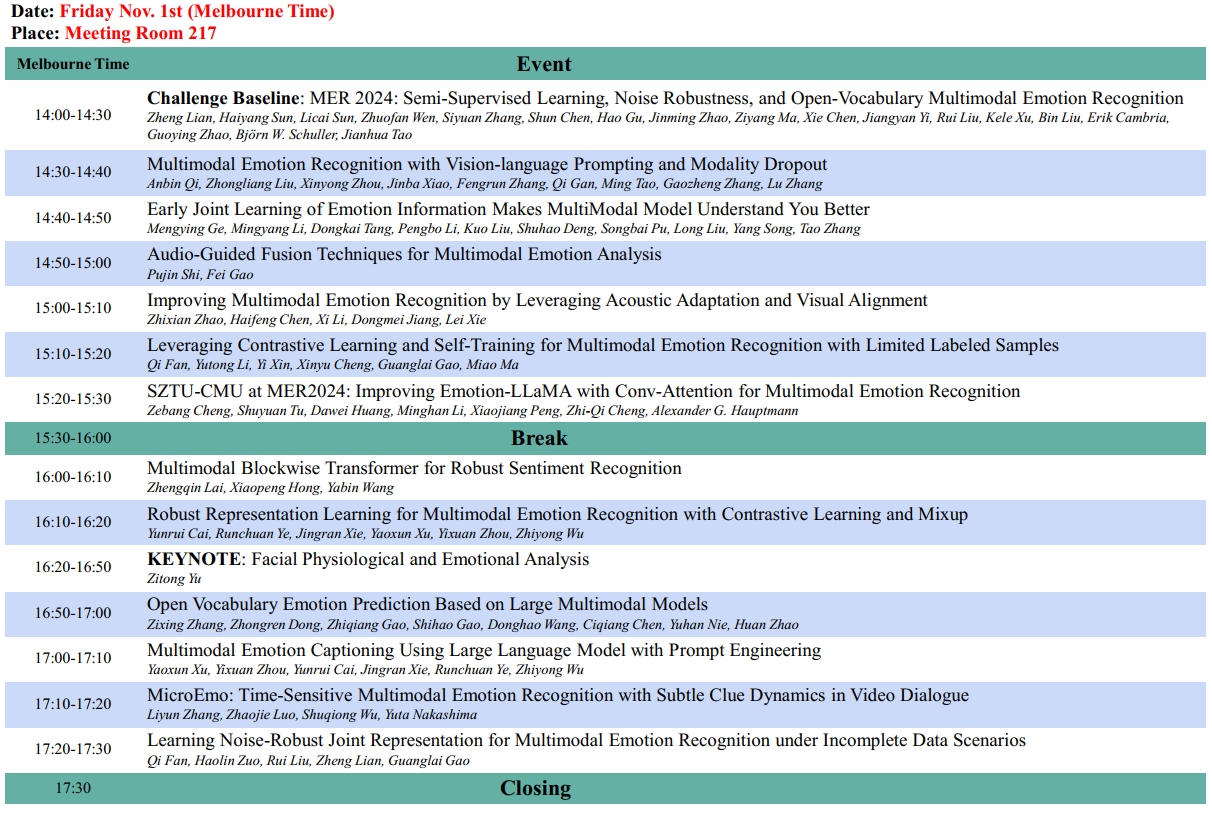

Compared with the previous MER23 challenge, this year we enlarge the dataset by including more labeled and unlabeled samples.

In addition to MER-SEMI and MER-NOISE, we introduce a new track, MER-OV.

Track 1. MER-SEMI. It is difficult to collect large amounts of samples with emotion labels.

To address this data sparsity, researchers have turned to unsupervised or semi-supervised learning, which exploits unlabeled data during training.

Furthermore, MERBench [1] points out the importance of using unlabeled data from the same domain as the labeled data.

Therefore, we provide a large number of human-centric unlabeled videos in MER2024 and encourage participants to explore more effective unsupervised or semi-supervised learning strategies for better performance.
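One common semi-supervised strategy is confidence-based pseudo-labeling: a model trained on the labeled set predicts on the unlabeled videos, and only high-confidence predictions are kept as extra training labels. The sketch below illustrates that idea only; it is not the MER2024 baseline method, and the helper name `select_pseudo_labels`, the 0.9 threshold, and the probability values are hypothetical.

```python
from typing import Dict, List, Tuple

# The six candidate labels used by MER-SEMI and MER-NOISE.
LABELS = ["worried", "happy", "neutral", "angry", "surprise", "sad"]


def select_pseudo_labels(
    probs: Dict[str, List[float]], threshold: float = 0.9
) -> List[Tuple[str, str]]:
    """Hypothetical helper: keep unlabeled samples whose highest class
    probability is at least `threshold`, paired with that class label."""
    selected = []
    for sample_id, p in probs.items():
        # Index of the most probable class (first one wins on ties).
        best = max(range(len(p)), key=lambda i: p[i])
        if p[best] >= threshold:
            selected.append((sample_id, LABELS[best]))
    return selected


if __name__ == "__main__":
    # Made-up softmax outputs for three unlabeled clips.
    unlabeled_probs = {
        "clip_a": [0.02, 0.93, 0.02, 0.01, 0.01, 0.01],
        "clip_b": [0.30, 0.20, 0.25, 0.10, 0.10, 0.05],
        "clip_c": [0.01, 0.01, 0.01, 0.95, 0.01, 0.01],
    }
    print(select_pseudo_labels(unlabeled_probs))
    # [('clip_a', 'happy'), ('clip_c', 'angry')]
```

Lowering the threshold admits more unlabeled samples at the cost of noisier pseudo-labels; in practice this trade-off is usually tuned on a validation split.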

Track 2. MER-NOISE. Noise is common in real-world videos.

It is hard to guarantee that every video is free of audio noise and that every frame is high-resolution.

To improve noise robustness, researchers have conducted a series of studies on emotion recognition under noisy conditions.

However, a benchmark dataset for fairly comparing different strategies is still lacking.

Therefore, we organize a track around noise robustness.

Although there are many types of noise, we consider the two most common ones: audio additive noise and image blur noise.

We encourage participants to exploit data augmentation [2] or other techniques [3] to improve the noise robustness of emotion recognition systems.
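As a rough illustration of augmenting training data with the two noise types above, the sketch mixes additive noise into an audio signal at a chosen signal-to-noise ratio (SNR) and blurs a grayscale frame. These are assumptions for illustration: the organizers' actual noise sources, SNR range, and blur kernel are not specified here, and a simple box blur stands in for whatever blur (for example, Gaussian) they may use. The function names are hypothetical.

```python
import math
import random
from typing import List


def add_noise_at_snr(signal: List[float], noise: List[float], snr_db: float) -> List[float]:
    """Mix `noise` into `signal` so that the result has the requested SNR in dB."""
    if len(noise) < len(signal):
        raise ValueError("noise must be at least as long as signal")
    noise = noise[: len(signal)]
    p_signal = sum(x * x for x in signal) / len(signal)
    p_noise = sum(n * n for n in noise) / len(noise)
    # Gain chosen so that p_signal / (gain**2 * p_noise) == 10 ** (snr_db / 10).
    gain = math.sqrt(p_signal / (p_noise * 10 ** (snr_db / 10)))
    return [x + gain * n for x, n in zip(signal, noise)]


def box_blur(image: List[List[float]], radius: int = 1) -> List[List[float]]:
    """Blur a grayscale image by averaging each pixel's (2r+1)x(2r+1)
    neighbourhood, clamping coordinates at the image border."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            count = 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += image[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    # One second of a 440 Hz tone at 16 kHz, corrupted by Gaussian noise at 5 dB SNR.
    clean = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(16000)]
    noise = [rng.gauss(0.0, 1.0) for _ in range(16000)]
    noisy = add_noise_at_snr(clean, noise, snr_db=5.0)
    frame = [[0.0, 0.0, 0.0], [0.0, 9.0, 0.0], [0.0, 0.0, 0.0]]
    print(box_blur(frame, radius=1)[1][1])  # 1.0
```

During training, one would typically sample the SNR and blur radius at random per example so the model sees a range of degradation levels.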

Track 3. MER-OV. Emotions are subjective and ambiguous. To increase annotation consistency, existing datasets typically limit the label space to a few discrete labels, employ multiple annotators, and use majority voting to select the most likely label.

However, this process may cause some correct but non-candidate or non-majority labels to be ignored, resulting in inaccurate annotations.
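A toy example makes this loss concrete: with majority voting, a label proposed by a minority of annotators disappears from the final annotation, whereas an open-vocabulary annotation can keep every proposed label. The annotations below are made up for illustration and do not describe how any specific dataset was labeled.

```python
from collections import Counter
from typing import List, Set


def majority_vote(annotations: List[str]) -> str:
    """Return the most frequent label; on ties, the label seen first wins."""
    return Counter(annotations).most_common(1)[0][0]


def all_proposed_labels(annotations: List[str]) -> Set[str]:
    """Keep every label proposed by at least one annotator."""
    return set(annotations)


if __name__ == "__main__":
    # Five hypothetical annotators describing the same clip.
    votes = ["worried", "worried", "sad", "worried", "sad"]
    print(majority_vote(votes))  # worried  ("sad" is discarded)
    print(sorted(all_proposed_labels(votes)))  # ['sad', 'worried']
```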

To this end, we introduce a new track on open-vocabulary emotion recognition.

We encourage participants to generate any number of labels in any category to describe the emotional state as accurately as possible [4].


Evaluation Metrics. For MER-SEMI and MER-NOISE, we choose two widely used metrics in emotion recognition: accuracy and weighted average F-score (WAF).

Considering the inherent class imbalance, we use WAF for the final ranking.
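To show why WAF is preferred under class imbalance, here is a from-scratch sketch of the standard definitions: accuracy is the fraction of correct predictions, and WAF averages per-class F1 scores weighted by each class's share of the ground-truth labels (the same quantity as scikit-learn's `f1_score(..., average="weighted")`). The official scores come from the baseline code; the function names and the tiny label lists here are hypothetical.

```python
from collections import Counter
from typing import Sequence


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of samples whose predicted label equals the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def weighted_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Per-class F1, weighted by the number of true samples in each class."""
    support = Counter(y_true)
    n = len(y_true)
    score = 0.0
    for label, count in support.items():
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        predicted = sum(p == label for p in y_pred)
        precision = tp / predicted if predicted else 0.0
        recall = tp / count
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        score += count / n * f1
    return score


if __name__ == "__main__":
    y_true = ["happy", "happy", "happy", "sad"]
    balanced = ["happy", "happy", "sad", "sad"]
    majority_only = ["happy"] * 4
    print(f"{accuracy(y_true, balanced):.2f} {weighted_f1(y_true, balanced):.4f}")  # 0.75 0.7667
    print(f"{accuracy(y_true, majority_only):.2f} {weighted_f1(y_true, majority_only):.4f}")  # 0.75 0.6429
```

Both predictors reach the same accuracy, but always guessing the majority class earns a lower WAF because the minority class contributes an F1 of zero.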

For MER-OV, we draw on our previous work [4], in which we extend traditional classification metrics (i.e., accuracy and recall) and define set-level accuracy and recall.

More details can be found in our baseline code.
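The following is a minimal sketch of set-level metrics, assuming they average over samples the quantities |Y ∩ Ŷ| / |Ŷ| (set-level accuracy) and |Y ∩ Ŷ| / |Y| (set-level recall), where Y is the ground-truth label set and Ŷ the predicted set, after synonymous labels are mapped to a common group. Please treat [4] and the baseline code as authoritative for the exact definitions. The official evaluation groups synonyms with an LLM; here a hand-written `SYNONYMS` dictionary is a hypothetical stand-in, and all function names are illustrative.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical synonym map standing in for the label-grouping step.
SYNONYMS: Dict[str, str] = {
    "joyful": "happy",
    "glad": "happy",
    "anxious": "worried",
    "nervous": "worried",
}


def normalize(labels: Set[str]) -> Set[str]:
    """Lower-case labels and map synonyms onto a shared group name."""
    return {SYNONYMS.get(label.lower(), label.lower()) for label in labels}


def set_level_scores(gold: List[Set[str]], pred: List[Set[str]]) -> Tuple[float, float]:
    """Return (set-level accuracy, set-level recall) averaged over samples."""
    acc_total = 0.0
    rec_total = 0.0
    for g, p in zip(gold, pred):
        g, p = normalize(g), normalize(p)
        hit = len(g & p)
        acc_total += hit / len(p) if p else 0.0  # precision-like: share of predictions that are correct
        rec_total += hit / len(g) if g else 0.0  # share of gold labels that were recovered
    n = len(gold)
    return acc_total / n, rec_total / n


if __name__ == "__main__":
    gold = [{"happy", "surprise"}, {"worried"}]
    pred = [{"joyful", "glad"}, {"nervous", "angry", "sad"}]
    acc, rec = set_level_scores(gold, pred)
    print(f"{acc:.3f} {rec:.3f}")  # 0.667 0.750
```

Predicting many labels tends to raise recall while lowering accuracy, so the two metrics together reward label sets that are both complete and precise.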

Dataset. Please download the End User License Agreement (EULA), fill it out, and send it to merchallenge.contact@gmail.com to access the data.

We will review your application and get in touch as soon as possible.

The EULA requires participants to use this dataset only for academic research and not to edit samples or upload them to the Internet.

Result submission. For MER-SEMI and MER-NOISE, each team should submit the most likely discrete label among the six candidate labels (i.e., worried, happy, neutral, angry, surprise, and sad).

For MER-OV, each team can submit any number of labels in any category.

For all three tracks, participants should predict results for 20,000 samples drawn from the 115,595 unlabeled samples, although only a small subset of them is evaluated.

To encourage generalization rather than optimization for a specific subset, we do not reveal which samples belong to the test subset.

For MER-OV, participants may only use open-source LLMs, MLLMs, or other open-source models. Closed-source models (such as the GPT or Claude series) are not allowed.

CodaLab link for MER2024-SEMI: https://codalab.lisn.upsaclay.fr/competitions/19437

CodaLab link for MER2024-NOISE: https://codalab.lisn.upsaclay.fr/competitions/19438

CodaLab link for MER2024-OV: https://codalab.lisn.upsaclay.fr/competitions/19439

Note: please register on CodaLab using the email address provided in the EULA or the address from which you sent the EULA.

The rankings of MER2024-SEMI and MER2024-NOISE are based on the CodaLab leaderboard.

For MER2024-OV, however, CodaLab is only used for format checking.

Each team may submit five times to the official email address, and the best of these submissions is used for the final ranking.

Because the evaluation relies on GPT-3.5, whose outputs are slightly random, we will run the evaluation code five times and report the average score.

The ranking of MER2024-OV track will be announced a few weeks after the competition ends.

Paper submission. All participants are encouraged to submit a paper describing their solution to the MRAC24 Workshop@ACM Multimedia.

The top-5 teams in each track MUST submit a paper.

The top-3 teams in each track will be awarded a certificate.

Paper submission link: https://cmt3.research.microsoft.com/MRAC2024

Baseline paper: https://arxiv.org/abs/2404.17113

Baseline code: https://github.com/zeroQiaoba/MERTools/tree/master/MER2024

Contact email: merchallenge.contact@gmail.com

[1] Zheng Lian, Licai Sun, Yong Ren, Hao Gu, Haiyang Sun, Lan Chen, Bin Liu, and Jianhua Tao. MERBench: A unified evaluation benchmark for multimodal emotion recognition. arXiv preprint arXiv:2401.03429, 2024.

[2] Devamanyu Hazarika, Yingting Li, Bo Cheng, Shuai Zhao, Roger Zimmermann, and Soujanya Poria. Analyzing modality robustness in multimodal sentiment analysis. In Proceedings of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 685–696, 2022.

[3] Zheng Lian, Lan Chen, Licai Sun, Bin Liu, and Jianhua Tao. GCNet: Graph completion network for incomplete multimodal learning in conversation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 45(07):8419–8432, 2023.

[4] Zheng Lian, Haiyang Sun, Licai Sun, Hao Gu, Zhuofan Wen, Siyuan Zhang, Shun Chen, Mingyu Xu, Ke Xu, Kang Chen, Lan Chen, Shan Liang, Ya Li, Jiangyan Yi, Bin Liu, and Jianhua Tao. Explainable multimodal emotion recognition. arXiv preprint arXiv:2306.15401, 2023.

[5] Zheng Lian, Haiyang Sun, Licai Sun, Zhuofan Wen, Siyuan Zhang, Shun Chen, Hao Gu, Jinming Zhao, Ziyang Ma, Xie Chen, Jiangyan Yi, Rui Liu, Kele Xu, Bin Liu, Erik Cambria, Guoying Zhao, Björn W. Schuller, and Jianhua Tao. MER 2024: Semi-supervised learning, noise robustness, and open-vocabulary multimodal emotion recognition. arXiv preprint arXiv:2404.17113, 2024.